A perceptron is one of the earliest and most basic types of artificial neural networks. It was introduced by Frank Rosenblatt in 1957 and is designed to perform binary classification tasks.

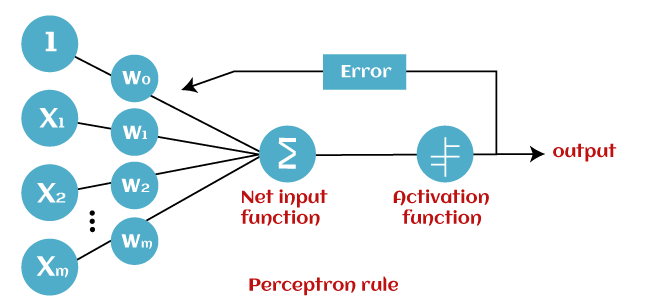

The perceptron consists of a single neuron with adjustable synaptic weights and a bias (the negative of a firing threshold). The neuron receives multiple input signals, multiplies each one by its weight, and sums the products. The bias is then added to this sum: if the result is positive, the neuron fires (outputs 1); otherwise, it does not fire (outputs 0). Equivalently, the neuron fires when the weighted sum exceeds a threshold equal to the negative of the bias.

[image credits javatpoint]
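The firing rule can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes the convention used by the update rule in this article, where the bias is added to the weighted sum and the result is compared with zero. The function name `perceptron_output` and the AND weights are hypothetical values chosen by hand for the example.

```python
def perceptron_output(inputs, weights, bias):
    """Return 1 if the weighted sum plus the bias is positive, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum + bias > 0 else 0


# Hand-picked (hypothetical) parameters that make the neuron act like logical AND:
# only the input (1, 1) pushes the sum 1 + 1 - 1.5 above zero.
and_weights = [1.0, 1.0]
and_bias = -1.5

outputs = [
    perceptron_output([a, b], and_weights, and_bias)
    for a in (0, 1)
    for b in (0, 1)
]
print(outputs)  # inputs in the order (0,0), (0,1), (1,0), (1,1)
```

With these parameters the neuron fires only for the input `(1, 1)`, so a single perceptron is enough to represent AND.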

The perceptron learns by adjusting its weights and bias value through a process called the perceptron learning rule or the perceptron training algorithm. This algorithm works as follows:

1. Initialize the weights `w_i` and bias `b` to small random values.
2. For each training example `(x_1, x_2, ..., x_n, t)`, where `t` is the target output (0 or 1):
   - a. Calculate the actual output `y` of the perceptron using the current weights and bias.
   - b. Update the weights and bias based on the error between the actual output `y` and the target output `t`:
     - If `y != t`, update the weights and bias: `w_i = w_i + η * (t - y) * x_i` and `b = b + η * (t - y)`.
     - If `y == t`, do not update the weights and bias.
3. Repeat step 2 for all training examples until convergence (i.e., until the perceptron correctly classifies all training examples).

Here, η is the learning rate, a positive value that controls the step size of the weight updates.
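The training procedure can be sketched as follows. This is one possible reading of the algorithm, under a few assumptions that are not in the text above: the names `predict` and `train_perceptron`, the initial range of ±0.05, the default learning rate of 0.1, the fixed seed, and the `max_epochs` cap (added so the loop always terminates) are all illustrative choices. The OR function is used as a small linearly separable dataset.

```python
import random


def predict(weights, bias, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, learning_rate=0.1, max_epochs=100, seed=0):
    """Train on (inputs, target) pairs with the perceptron learning rule."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(examples[0][0])
    # Step 1: small random initial weights and bias (range is an assumption).
    weights = [rng.uniform(-0.05, 0.05) for _ in range(n)]
    bias = rng.uniform(-0.05, 0.05)

    for _ in range(max_epochs):  # step 3: repeat passes over the data
        mistakes = 0
        for x, t in examples:  # step 2: visit each training example
            y = predict(weights, bias, x)
            if y != t:
                # w_i = w_i + eta * (t - y) * x_i ; b = b + eta * (t - y)
                for i in range(n):
                    weights[i] += learning_rate * (t - y) * x[i]
                bias += learning_rate * (t - y)
                mistakes += 1
        if mistakes == 0:  # converged: a full pass with no errors
            break
    return weights, bias


# Logical OR is linearly separable, so training reaches a pass with no errors.
or_examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_perceptron(or_examples)
predictions = [predict(weights, bias, x) for x, _ in or_examples]
print(predictions)
```

Because `t - y` is always +1 or -1 when a mistake occurs, each update nudges the decision boundary toward the misclassified point by an amount proportional to the learning rate.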

The perceptron can learn linearly separable patterns: if the two classes of input points can be separated by a single straight line (or a hyperplane in higher dimensions), the learning rule is guaranteed to find a separating boundary after a finite number of updates (the perceptron convergence theorem), at which point every training example is classified correctly. However, if the data is not linearly separable (XOR is the classic example), the algorithm never converges: no single line classifies all training examples correctly, so the weights keep changing indefinitely.
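The limitation on non-separable data can be observed on XOR, a standard example of a dataset that no single line can separate. The sketch below counts the mistakes made in each pass over the data. It is an illustration under assumptions: the function name `mistakes_per_epoch`, the learning rate, and the number of passes are hypothetical, and the weights start at zero rather than at small random values to keep the run deterministic.

```python
def mistakes_per_epoch(examples, learning_rate=0.1, epochs=50):
    """Run the perceptron learning rule and record the mistakes in each pass."""
    n = len(examples[0][0])
    weights = [0.0] * n  # zero initialisation (an assumption, for determinism)
    bias = 0.0
    history = []
    for _ in range(epochs):
        mistakes = 0
        for x, t in examples:
            total = sum(w * xi for w, xi in zip(weights, x)) + bias
            y = 1 if total > 0 else 0
            if y != t:
                # Same update rule as before: move toward the misclassified point.
                for i in range(n):
                    weights[i] += learning_rate * (t - y) * x[i]
                bias += learning_rate * (t - y)
                mistakes += 1
        history.append(mistakes)
    return history


# XOR: the output is 1 exactly when the two inputs differ.
xor_examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
xor_history = mistakes_per_epoch(xor_examples)
print(min(xor_history))  # never reaches 0: every pass contains a mistake
```

A pass with zero mistakes would mean that one fixed set of weights classifies all four XOR points correctly, which is impossible for a linear boundary, so the mistake count stays at one or more no matter how many passes are run.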

Despite its simplicity, the perceptron laid the foundation for more complex neural network architectures and was a significant milestone in the development of artificial neural networks and machine learning.